With the tragic deaths (in a taxi accident) of John Nash and his wife, people have been explaining Nash’s contributions to the general public. The single best piece I’ve seen so far is this one by John Cassidy. However, even Cassidy’s piece doesn’t really make clear exactly how Nash’s famous equilibrium concept works. I’ll give some simple examples in the present post so that the layperson can understand just what Nash accomplished in his celebrated 27-page doctoral dissertation. (Be sure to look at his bibliography on the last page.)

I have seen many commentators tell their readers that John Nash developed the theory of non-cooperative games, in (alleged) contrast to the work on cooperative games by John von Neumann and Oskar Morgenstern. However, it’s a bit misleading to talk in this way. It’s certainly true that von Neumann and Morgenstern (henceforth vNM) did a lot of work on cooperative games (which involve coalitions of players where the players in a coalition can make “joint” moves). But vNM also did pioneering work on non-cooperative games–games where there are no coalitions and every player chooses his own strategy to serve his own payoff. However, vNM only studied the special case of 2-person, zero-sum games. (A zero-sum game is one in which one player’s gain is exactly counterbalanced by the other player’s loss.) This actually covers a lot of what people have in mind when they think of a “game,” including chess, checkers, and card games (if only two people are playing).

The central result from the work of vNM was the minimax theorem. In a finite two-person zero-sum game, there is a value V for the game such that one player can guarantee himself a payoff of at least V while the other player can limit his losses to V. The name comes from the fact that each player thinks, “Given what I do, what will the other guy do to maximize his payoff in response? Now, having computed my opponent’s best-response for every strategy I might pick, I want to pick my own strategy to minimize that value.” Since we are dealing with a zero-sum game, each player does best for himself by minimizing the other guy’s payoff.
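For readers who like symbols, here is one common way to write the theorem down. The notation is an assumption for illustration and is not taken from vNM's own text: $A$ is the matrix of payoffs to player 1 (so player 2 receives $-A$), while $x$ and $y$ are the two players' mixed strategies, i.e. probability vectors over their $m$ and $n$ pure strategies.

$$
\max_{x \in \Delta_m} \; \min_{y \in \Delta_n} \; x^{\top} A \, y \;=\; \min_{y \in \Delta_n} \; \max_{x \in \Delta_m} \; x^{\top} A \, y \;=\; V
$$

The left-hand side is the most player 1 can guarantee himself no matter what player 2 does; the right-hand side is the least that player 2 can hold player 1 to. The theorem says the two numbers coincide.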

This was a pretty neat result. However, even though plenty of games–especially the ones we have in mind with the term “game”–are two-person zero-sum, there are many strategic interactions where this is not the case. This is where John Nash came in. He invented a solution concept that would work for the entire class of non-cooperative games–meaning those with n players and where the game could be negative-sum, zero-sum, or positive-sum. Then he showed the broad conditions under which his equilibrium would exist. (In other words, defining an equilibrium concept for these games would have been far less impressive or useful if an equilibrium rarely existed for any particular n-person positive-sum game.)

For every game we analyze in this framework, we need to specify the set of players, the set of pure strategies available to each player, and finally the payoff function which takes a profile of actual strategies from each player as the input and spits out the payoffs to each player in that scenario. (One of the mathematical complexities is that players are allowed to choose mixed strategies, in which they assign probabilities to their set of pure strategies. So technically, the payoff function for the game as a whole maps from every possible combination of each player’s mixed strategies onto the list of payoffs for each player in that particular outcome.) Now that I’ve given the framework, we can illustrate it with some simple games.

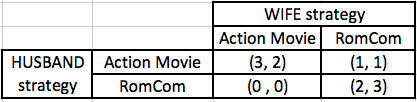

One popular game is the so-called Battle of the Sexes. The story is that a husband and wife have to go either to an event the husband prefers (let’s say it’s an action movie) or an event the wife prefers (let’s say it’s a romantic comedy). But the catch is that each person would rather watch a movie with his or her spouse than be alone, and this consideration trumps the choice of the movie. We can (start to) model this story in game-theoretic form like this:

Set of players = {Husband, Wife}

Husband’s set of pure strategies = {Action, RomCom}

Wife’s set of pure strategies = {Action, RomCom}

Rather than formally define a payoff function, it’s easier to construct a matrix showing the payoffs to our players from the four possible combinations of their pure strategies, like this (where the husband’s payoff is the first number in each cell and the wife’s payoff comes after the comma):

| | Wife: Action | Wife: RomCom |
|---|---|---|
| **Husband: Action** | 3, 2 | 1, 1 |
| **Husband: RomCom** | 0, 0 | 2, 3 |

Let’s make some observations about the above game. First, it isn’t a zero-sum game, so the minimax result doesn’t apply. In other words, the husband wouldn’t want to approach this situation with the goal of harming the other person as much as possible.

However, the situation is strategic, in the sense that the payoff to each person depends not just on the strategy that person chooses, but also on the strategy the other person chooses. This is what makes game theory different from more conventional settings in economic theory. For example, in mainstream textbook micro, the consumer has a “given” budget and takes market prices as “given,” and then maximizes utility according to those constraints. The consumer doesn’t have to “get into the head” of the producer and worry about whether the producer will change prices/output based on the consumer’s buying decision.

Anyway, back to our “battle of the sexes” game above. Even though the game is positive-sum, there is still the “battle” element because the husband would prefer they both choose the action movie. That yields the best outcome possible for him (a payoff of 3) but only a 2 for the wife. The wife, in contrast, would prefer they both go to the romantic comedy, because she gets a 3 in that outcome (and 3 > 2). Yet to reiterate, they both prefer the other’s company, rather than seeing the preferred movie in isolation (i.e. 2 > 1). And of course, the worst possible outcome–where each gets a payoff of 0–occurs if for some crazy reason the husband watches the romantic comedy (by himself) while the wife watches the action movie (by herself).

In this game, there are two Nash equilibria in pure strategies. In other words, if we (right now, for simplicity) are only allowing the husband and wife to pick either of their two available pure strategies, then there are only two combinations that form a Nash equilibrium. Specifically, the strategy profiles of (Action Movie, Action Movie) and (RomCom, RomCom) both constitute Nash equilibria.

Formally, a Nash equilibrium is defined as a profile of strategies (possibly mixed) in which each player’s chosen strategy constitutes a best-response, given every other player’s chosen strategy in the particular profile.
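In symbols, the definition can be sketched as follows. The notation is an assumption introduced for illustration: $u_i$ is player $i$'s (expected) payoff function, $S_i$ is the set of strategies available to player $i$ (mixed strategies included), and $s_{-i}^{*}$ denotes the strategies of everyone except player $i$. A profile $s^{*} = (s_1^{*}, \dots, s_n^{*})$ is a Nash equilibrium if

$$
u_i\bigl(s_i^{*},\, s_{-i}^{*}\bigr) \;\ge\; u_i\bigl(s_i,\, s_{-i}^{*}\bigr)
\qquad \text{for every player } i \text{ and every } s_i \in S_i .
$$

That is, holding everyone else's strategy fixed, no single player can raise his own payoff by switching to something else.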

We can test our two stipulated profiles to see that they are indeed Nash equilibria. First let’s test (Action Movie, Action Movie). If the husband picks “Action Movie” as his strategy, then the wife’s available payoffs are either a 2 (if she also plays “Action Movie”) or a 1 (if she plays “RomCom”). Since 2>1, the wife would want to play “Action Movie” given that her husband is playing “Action Movie.” So that checks. Now for the husband: Given that his wife is playing “Action Movie,” he can get a payoff of either 3 or 0. Since 3>0, he also does better by playing “Action Movie” than “RomCom,” given that his wife is playing “Action Movie.” So that checks. We just proved that (Action Movie, Action Movie) is a Nash equilibrium.

We’ll go quicker for the other stipulated Nash equilibrium of (RomCom, RomCom): If the husband picks “RomCom,” then the wife’s best response is “RomCom” because 3>0. So that checks. And if the wife picks “RomCom,” then the husband’s best response is “RomCom” because 2>1. So that checks, and since we’ve verified that each player is best responding to the other strategies in the profile of (RomCom, RomCom), the whole thing is a Nash equilibrium.
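The same check can be done mechanically. Below is a minimal Python sketch that tests all four pure-strategy profiles of the Battle of the Sexes. The payoff numbers are the ones used in the discussion above; the names `PAYOFFS` and `is_pure_nash` are just labels I made up for this illustration.

```python
from itertools import product

STRATEGIES = ("Action", "RomCom")

# Payoffs as (husband, wife), keyed by (husband's choice, wife's choice).
PAYOFFS = {
    ("Action", "Action"): (3, 2),
    ("Action", "RomCom"): (1, 1),
    ("RomCom", "Action"): (0, 0),
    ("RomCom", "RomCom"): (2, 3),
}


def is_pure_nash(husband, wife):
    """True if neither spouse can gain by unilaterally switching movies."""
    h_pay, w_pay = PAYOFFS[(husband, wife)]
    # Husband's alternatives, holding the wife's choice fixed.
    husband_ok = all(PAYOFFS[(alt, wife)][0] <= h_pay for alt in STRATEGIES)
    # Wife's alternatives, holding the husband's choice fixed.
    wife_ok = all(PAYOFFS[(husband, alt)][1] <= w_pay for alt in STRATEGIES)
    return husband_ok and wife_ok


pure_equilibria = [p for p in product(STRATEGIES, repeat=2) if is_pure_nash(*p)]
print(pure_equilibria)  # [('Action', 'Action'), ('RomCom', 'RomCom')]
```

The brute-force approach only works because the game is tiny, but it mirrors the reasoning exactly: for each profile, ask whether each player is best-responding to the other.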

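Now for one last example, to show the robustness of Nash’s contribution. There are some games where there is no Nash equilibrium in pure strategies. For example, consider this classic game, Rock, Paper, Scissors (where Joe’s payoff is the first number in each cell and Mary’s payoff comes after the comma):

| | Mary: Rock | Mary: Paper | Mary: Scissors |
|---|---|---|---|
| **Joe: Rock** | 0, 0 | -1, 1 | 1, -1 |
| **Joe: Paper** | 1, -1 | 0, 0 | -1, 1 |
| **Joe: Scissors** | -1, 1 | 1, -1 | 0, 0 |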

Note that in this game, there is no Nash equilibrium in pure strategies. If Joe plays “Rock,” then Mary’s best response is “Paper.” But if Mary is playing “Paper,” Joe wouldn’t want to play “Rock.” (He would do better playing “Scissors.”) And so on, for the nine possible combinations of pure strategies.
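Rather than walking through all nine combinations by hand, here is a rough Python sketch that does it. It assumes the usual scoring of 1 for a win, -1 for a loss, and 0 for a tie; the helper names `payoff` and `best_responses` are my own inventions for the example.

```python
from itertools import product

MOVES = ("Rock", "Paper", "Scissors")
# BEATS[x] is the move that x defeats.
BEATS = {"Rock": "Scissors", "Paper": "Rock", "Scissors": "Paper"}


def payoff(mine, theirs):
    """Payoff to the player choosing `mine`: 1 for a win, -1 for a loss, 0 for a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def best_responses(theirs):
    """All pure strategies that maximize my payoff against the opponent's move."""
    top = max(payoff(m, theirs) for m in MOVES)
    return {m for m in MOVES if payoff(m, theirs) == top}


# A profile is a pure Nash equilibrium only if each move is a best response to the other.
pure_equilibria = [
    (joe, mary)
    for joe, mary in product(MOVES, repeat=2)
    if joe in best_responses(mary) and mary in best_responses(joe)
]
print(pure_equilibria)  # []
```

The list comes back empty: whatever pair of moves we start from, at least one player wants to switch.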

Although there’s no Nash equilibrium in pure strategies, there exists one in mixed strategies. In other words, if we allow Joe and Mary to assign probabilities to each of their pure strategies, then we can find a Nash equilibrium in that broader profile. To cut to the chase, if each player randomly picks each of his or her pure strategies one-third of the time, then we have a Nash equilibrium in those two mixed strategies.

Let’s check our stipulated result. Given that Joe is equally mixing over “Rock,” “Paper,” and “Scissors,” Mary is actually indifferent between her three pure strategies. No matter which of the pure strategies she picks, the mathematical expectation of her payoff is 0. For example, if she picks “Paper” with 100% probability, then 1/3 of the time Joe plays “Rock” and Mary gets 1, 1/3 of the time Joe plays “Paper” and Mary gets 0, and 1/3 of the time Joe plays “Scissors” and Mary gets -1. So her expected payoff before she sees Joe’s actual play is (1/3 x 1) + (1/3 x 0) + (1/3 x [-1]) = (1/3) – (1/3) = 0. We could do a similar calculation for Mary playing “Rock” and “Scissors” against Joe’s stipulated mixed strategy of 1/3 weight on each of his pure strategies.
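For anyone who wants to see all three calculations at once, here is a small Python sketch that computes Mary's expected payoff from each pure strategy against Joe's one-third mixture. It uses exact fractions to avoid rounding noise; the names `MARY_PAYOFF`, `joe_mix`, and `expected_payoff` are assumptions made for this illustration.

```python
from fractions import Fraction

MOVES = ("Rock", "Paper", "Scissors")

# MARY_PAYOFF[mary_move][joe_move]: 1 if Mary wins, -1 if she loses, 0 for a tie.
MARY_PAYOFF = {
    "Rock":     {"Rock": 0,  "Paper": -1, "Scissors": 1},
    "Paper":    {"Rock": 1,  "Paper": 0,  "Scissors": -1},
    "Scissors": {"Rock": -1, "Paper": 1,  "Scissors": 0},
}

# Joe's stipulated mixed strategy: one-third weight on each pure strategy.
joe_mix = {move: Fraction(1, 3) for move in MOVES}


def expected_payoff(mary_move, opponent_mix):
    """Mary's expected payoff from a pure strategy against Joe's mixed strategy."""
    return sum(prob * MARY_PAYOFF[mary_move][joe_move]
               for joe_move, prob in opponent_mix.items())


mary_expected = {move: expected_payoff(move, joe_mix) for move in MOVES}
for move, value in mary_expected.items():
    print(move, value)  # each line shows an expected payoff of 0
```

All three pure strategies come out to exactly 0, which is the indifference claimed in the text.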

Therefore, since Mary gets an expected payoff of 0 by playing any of her pure strategies against Joe’s even mixture, any of them constitutes a “best response,” and moreover any probability-weighted mixture of them is also a best response. In particular, Mary would be perfectly happy to mix 1/3 on each of her strategies against Joe’s stipulated strategy, because that too would give her an expected payoff of 0 and she can’t do any better than that. (I’m skipping the step of actually doing the math to show that mixing over pure strategies that have the same expected payoff gives that same expected payoff. But I’m hoping it’s intuitive to the reader that if Mary gets 0 from playing any of her pure strategies, then if she assigns probabilities to two or three of them, she also gets an expected payoff of 0.)
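For readers who do want that step spelled out, here is a short sketch. The symbols are assumptions introduced for illustration: $p$ is Joe's even mixture, $q = (q_R, q_P, q_S)$ is any mixture Mary might choose (non-negative weights summing to 1), and $E[u_M(k, p)]$ is Mary's expected payoff from pure strategy $k$ against $p$. Because expected payoffs are linear in Mary's probabilities,

$$
E\bigl[u_M(q, p)\bigr]
\;=\; \sum_{k \in \{R, P, S\}} q_k \, E\bigl[u_M(k, p)\bigr]
\;=\; \sum_{k \in \{R, P, S\}} q_k \cdot 0
\;=\; 0 .
$$

So every mixture Mary could pick, including the even one, earns exactly the same expected payoff of 0 against Joe's even mixture.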

Thus far we’ve just done half of the work to check that our stipulated mixed strategy profile is indeed a Nash equilibrium. Specifically, we just verified that if Joe is mixing equally over his pure strategies, then Mary is content to mix equally over her pure strategies in response. It remains to do the opposite, namely, to verify that Joe is content to mix equally over his pure strategies, given that Mary is doing so. But since this game is perfectly symmetric, I hope the reader can see that we don’t have any more work; we would just be doing the mirror image of our above calculations.

To bring things full circle, and to avoid confusion, I should mention that von Neumann and Morgenstern’s framework could handle our Rock, Paper, Scissors game, since it is a two-person zero-sum game. Specifically, the value V of the game is 0. If Joe mixes equally over his pure strategies, then he can minimize Mary’s expected payoff from her best response to 0, and Joe can limit his expected losses to 0. (The reason I chose a two-person zero-sum game to illustrate a mixed strategy Nash equilibrium is that I wanted to keep things as simple as possible.)

Now that we’ve seen what a Nash equilibrium in mixed strategies looks like, I can relate Nash’s central result in his 27-page dissertation: Using a “fixed point theorem” from mathematics, Nash showed the general conditions under which we can prove that there exists at least one Nash equilibrium for a game. (Of course, Nash didn’t call his solution concept a “Nash equilibrium” in his dissertation; he called it an “equilibrium point.” The label “Nash equilibrium” came later from others.)

Oh, one last thing. Now that we know what Nash did at Princeton, can you appreciate how absurd the relevant scenes from the Ron Howard movie were?

When the movie’s Nash (played by Russell Crowe) tells his friends that they need to stop picking their approach to the ladies in terms of narrow self-interest, and instead figure out what the group as a whole needs to do in order to promote the interest of the group, that is arguably the exact opposite of the analysis in the real Nash’s doctoral dissertation. Indeed, if we analyzed the strategic environment of the bar the way the movie Nash does, the real Nash would say, “If all the guys could agree to ignore the pretty blonde woman and focus on her plainer friends, all the guys would be happier than if they each focused on the pretty blonde. But, that outcome doesn’t constitute a Nash equilibrium, so alas, we can’t expect it to work. If the rest of us focused on the plainer friends, we would each have an incentive to deviate and go after the pretty blonde. Ah, the limits of rational, self-interested behavior.”

(I hope the reader will forgive the possibly sexist overtones of the preceding paragraph, but it’s how Ron Howard chose to convey Nash’s insights to the world. I am playing the hand I was dealt.)

Source: https://web.archive.org/web/20161215084741/https://www.mises.ca/john-nashs-equilibrium-concept-in-game-theory/